The cognitive bias known as the streetlight effect describes our tendency as humans to look for clues where it’s easiest to search, regardless of whether that’s where the answers are.

For decades in the software industry, we’ve focused on testing our applications under the reassuring streetlight of GitOps. It made sense in theory: wait for changes to the codebase made by engineers, then trigger a re-test of your code. If your tests pass, you’re good to go.

But we’ve known for years that the vast (and growing) majority of changes to an application come from outside the codebase – think third-party libraries, open source tools, and microservices galore. Your application is no longer composed only of code you or your team wrote and control.


Why, then, when your application breaks are you searching for the issue in your lines of code, knowing full well that most of your changes are coming from beyond the repository? This is our modern-day streetlight effect in action, and it’s a recurring reality for all software teams.

However, AI is here to solve everything, right? Maybe, but the path to AI Nirvana is littered with complexity. If we thought GitOps was the answer to all of life’s breaking changes, what do we do when models, training datasets, and evaluations don’t fit into the repo? Whereas a few years ago, managing reliability and availability purely through a VCS was an adorable but conceivable dream, now it’s pure fantasy.

GitOps was already broken. Adding AI to the mix is the final nail in the coffin. The time has come, and we can’t pretend anymore: we must expand the way we’re thinking about change in the world of software.

A brave new world

Imagine you’ve built a cool new feature using an LLM. You pick a model, call an API on Hugging Face or OpenAI, and then you build a nice user interface on top of it. Voilà! You just created a chatbot that answers questions and enables a delightful experience for your customers! However, you’ll inevitably hit a wall as you quickly realize your new app is super expensive, untestable, and a recipe for on-call wakeups.

Why? Because the way we build, test, and release AI-enabled software is different. It’s one thing to experiment with how to bring AI into your product; it’s another thing to implement it in production, at scale, and with confidence. What was a fun experiment is now just another thing that can bring down your product.

How can you know that the model is changing?

How can you ensure that the right answer is provided to your customers?

The stakes are high. Engineering organizations at this very moment are facing tremendous pressure from the market, from leadership, from their boards, to “smartify” all the experiences in the product using GenAI. For the first time in a long time, the business side of the house is an equal partner in driving technology adoption.

This wasn’t the case with other recent technological waves like CI/CD, Kubernetes, or Docker. These were engineering practices and tools that made it easier to drive business outcomes. Now it’s business folks who are catching the magic and putting pressure on their teams to jump in.

And once they do? Eng teams adding AI to their production-scale applications are about to hit a wall. They’ll take one step and realize they cannot make this work fit into a normal CI/CD flow – because testing software in an AI world has taken on entirely new dimensions.

How confident is confident enough?

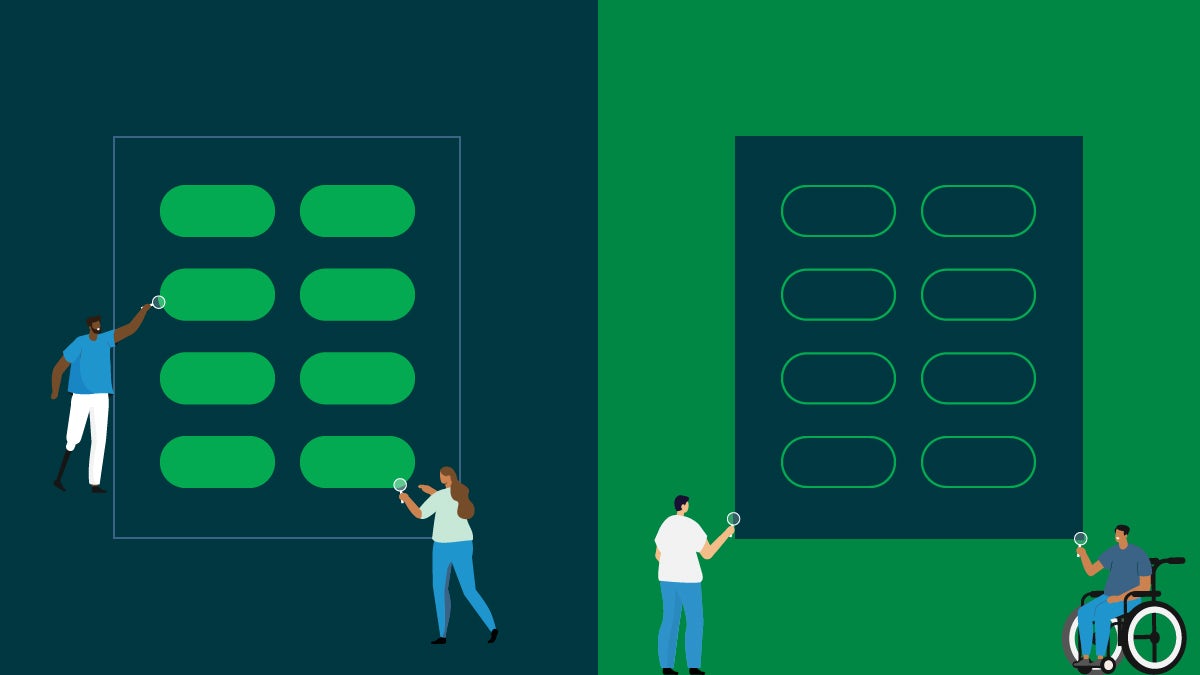

An AI-enabled piece of software has a model, testing datasets, and evaluators. All of these can change. For example, a dev could say “I’d like to refine how I prompt the model in order to improve my feature.” So they begin by swapping out one prompt for a new and improved one. That single change means a whole new set of tests needs to be run. Is the output still ethical? Precise? Accurate? The definition of what counts as a good answer from the LLM has to be requalified to make sure it’s still valid. But the outputs are never deterministic, so how do you determine the confidence level?

The way you test AI-powered software is not the way you test deterministic software.

The way we test will no longer be “If I call this function, I know I’ll retrieve an output of 0.” It now becomes, “Well, if I prompt with this type of context, I should receive something like this other value.” We’re now dealing in confidence scores, not binary answers: does it fall within a threshold of acceptability? If so, carry on.

Testing in an AI-flavored universe must follow a data science approach: take a prompt, call the model, evaluate the answer, use a second LLM to check that evaluation, and so on. It gets complicated fast.
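To make the shift from binary assertions to thresholds concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM call, `token_overlap` is a toy evaluator (a real setup might use a second LLM or a task-specific metric), and the `0.7` threshold and three samples per prompt are arbitrary choices.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple


def token_overlap(answer: str, reference: str) -> float:
    """Toy evaluator (hypothetical): share of reference words found in the answer."""
    ref = set(reference.lower().split())
    ans = set(answer.lower().split())
    return len(ref & ans) / len(ref) if ref else 0.0


def evaluate(
    model: Callable[[str], str],
    cases: Sequence[Tuple[str, str]],
    evaluator: Callable[[str, str], float] = token_overlap,
    threshold: float = 0.7,
    samples: int = 3,
) -> Tuple[float, bool]:
    """Score a model over (prompt, reference) pairs and compare to a threshold."""
    per_case = []
    for prompt, reference in cases:
        # Outputs are not deterministic, so sample each prompt several times
        # and average the scores instead of trusting a single response.
        runs = [evaluator(model(prompt), reference) for _ in range(samples)]
        per_case.append(mean(runs))
    overall = mean(per_case)
    return overall, overall >= threshold


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same text."""
    return "Paris is the capital of France"


cases = [("What is the capital of France?", "Paris France capital")]
score, passed = evaluate(fake_model, cases)
print(f"score={score:.2f} passed={passed}")
```

The test no longer asks “did I get exactly this value?” but “did the averaged score clear the bar?”, which is the kind of check a pipeline job could gate on.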

Pipeline triggers for the way you build today

At CircleCI we’ve purposely been making our core platform less dependent on the VCS, so we can support change from anywhere. We started by expanding support from GitHub to Bitbucket and GitLab. Were we anticipating the AI explosion enabled by OpenAI and ChatGPT?

I wish the answer were yes. Our goal was to validate changes like package version changes or Xcode image updates. But the explosion of AI has made it more important than ever to be able to build, test, evaluate, deploy, train, and monitor applications.

If you are solving the software delivery problem by building a new VCS in 2023, you’re probably doing it wrong. If you’re trying to build AI-enabled apps with the repo as the foundation of all change, well, I have a bridge to sell you.

How are we making AI-powered software a reality? One that provides even more value to customers, while simultaneously reducing risk?

- We’re adding AI evaluations as a component of jobs on CircleCI.
- We’re enabling inbound webhooks so customers can trigger CircleCI pipelines from anywhere, including from Hugging Face model updates or dataset changes.
- Where an inbound webhook isn’t simple enough, we’re building improved integrations.
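As a rough illustration of what “triggering a pipeline from anywhere” can look like from the sending side, the sketch below builds an HTTP POST that reports a change happening outside the repo. It is not CircleCI’s actual webhook format: the URL, the payload fields, and the `build_trigger_request` helper are all hypothetical placeholders, and the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_trigger_request(webhook_url: str, event: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST describing an out-of-repo change."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical URL and payload; a real inbound webhook defines its own.
request = build_trigger_request(
    "https://example.com/hooks/pipeline-trigger",
    {"source": "model-registry", "change": "model-updated", "model": "my-org/my-model"},
)

# Sending would be: urllib.request.urlopen(request)
print(request.get_method(), request.full_url)
```

The point is that the trigger is an event about a model or dataset, not a commit, so the pipeline can re-run evaluations even when no line of code has changed.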

We’re paving the road to bring you confidence developing AI-powered software. What you do from there is up to you.